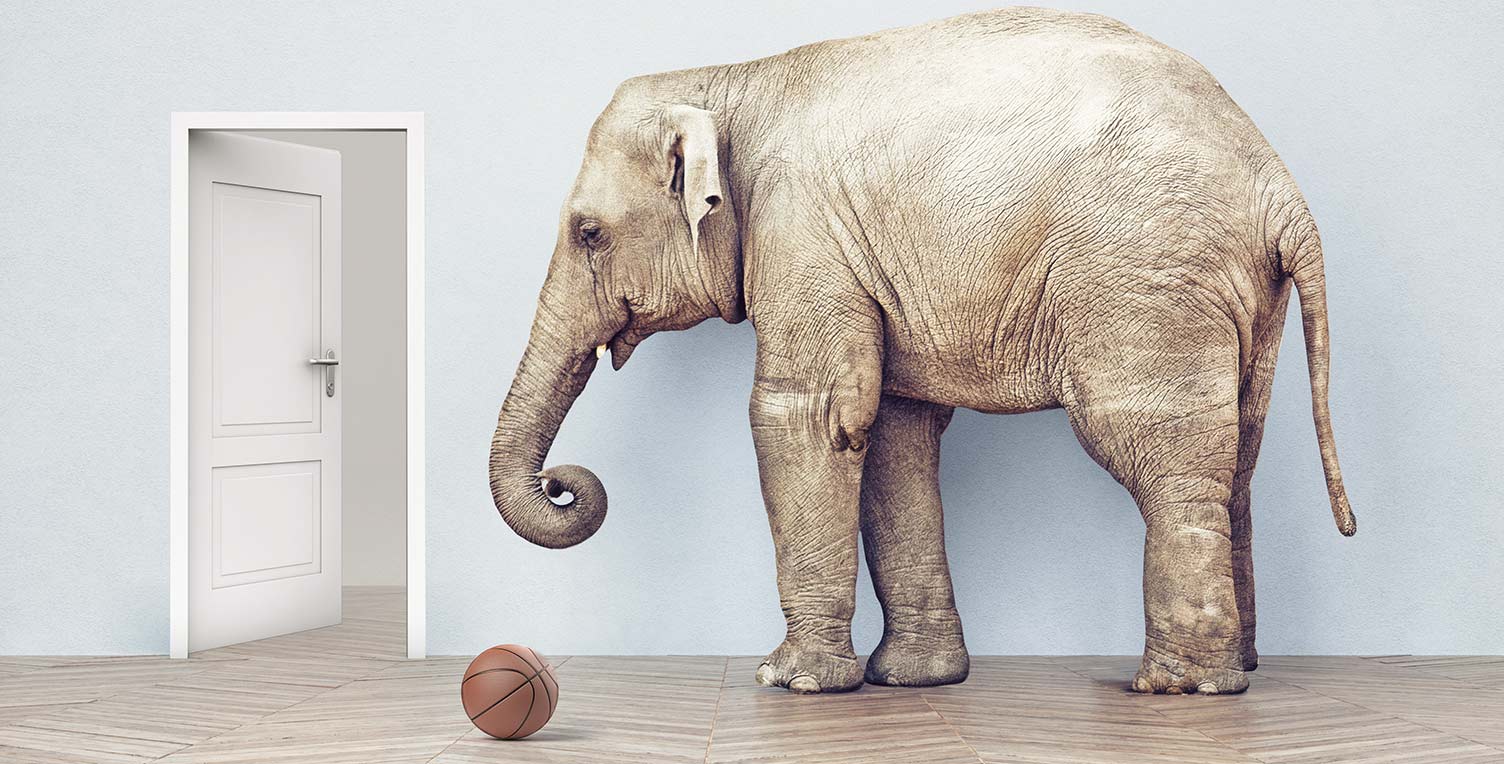

When testing whether a machine has common sense, you might think to ask it a simple question like "Can an elephant fit through a doorway?" Such intuitive probes can be immensely useful; however, machines often find the right answer for the wrong reason. As a result, defining what it means for a machine to have common sense, and how to measure it, is one of the primary challenges in the area. As part of our work, we're invested in exploring and iterating on a strong suite of core commonsense tasks against which we can measure progress.